Gain flexibility and choice at the edge with Red Hat

The edge spans many locations, each with different needs

Intelligent applications can help you deliver high-value, differentiated experiences to better connect with your customers and partners. These applications often require fast, secure access to data and compute resources. As a result, many organizations are deploying applications and workloads at the edge to reduce latency, trim costs, and gain faster insights.

Edge computing shifts compute processes from the datacenter to endpoints at remote locations. It is often used for latency-sensitive applications, applications that must run in locations that are periodically disconnected from central datacenters, and situations when regulatory and compliance requirements limit where data may travel and reside.

Red Hat views edge computing as a natural extension of hybrid cloud environments, operations, and strategies. Incorporating edge deployments into hybrid cloud environments can provide end-to-end consistency, simplified management, and immense scalability. You can operate and administer your entire environment — from core to edge — in the same manner to streamline development, deployment, and management. A hybrid cloud approach that includes edge computing can also help you develop and implement security processes and tools to protect your applications, infrastructure, and business across all locations.

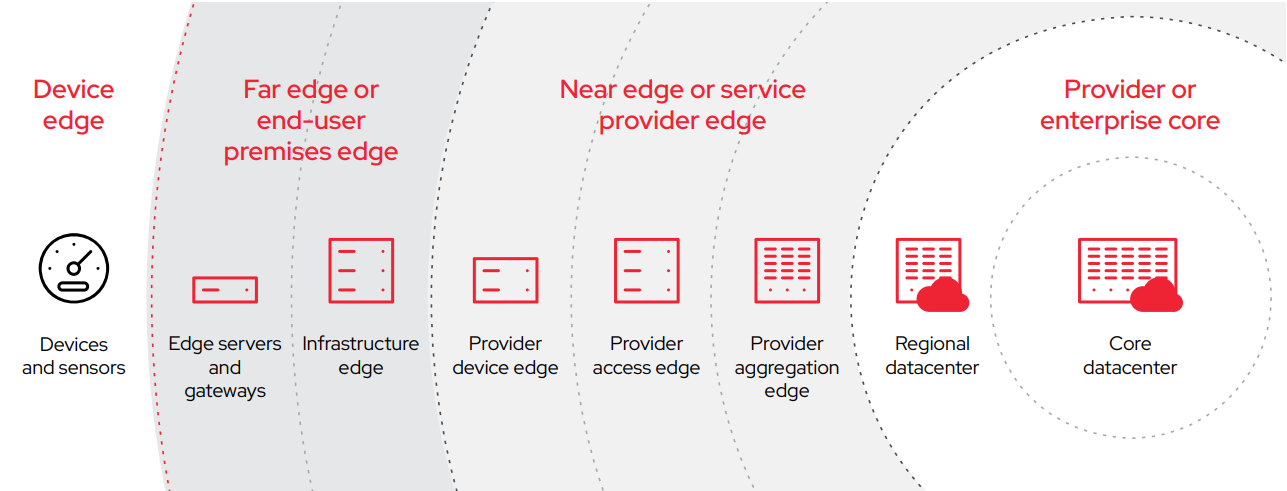

The edge is not a single, defined location — there are many different edge tiers, each with different properties and requirements. For example, edge locations vary in physical size, available power and cooling capabilities, and network connectivity. They can also vary in the number of staff and staff skills available on-site. In general, as you move further out from your core datacenter, the latency in communication between edge and core locations increases. As a result, each edge deployment is unique.

Gain more choice for your edge deployment

Red Hat takes an open approach to deliver the capabilities, flexibility, and expertise needed to make your edge implementation successful. We offer integrated platforms and tools that let you consistently and efficiently deploy, manage, and protect workloads at scale from your core to any edge. Plus, our certified partner ecosystem lets you deploy the third-party hardware, software, and solutions your business requires with confidence, so you can build complete edge stacks and solutions.

Within the next 12 months, 61% of organizations are planning to use edge computing and Internet of Things (IoT) technologies separately or in combination.1

Together, Red Hat® platforms and technologies form a common, flexible, scalable foundation that works across your entire hybrid cloud and edge environment. Key benefits include:

- Consistent operation. Deploy and manage applications, data, and clusters in the same manner, regardless of location and underlying hardware, with a unified platform and centralized management and automation tools.

- Integrated security. Protect your workloads by integrating security throughout your application life cycles, automatically enforcing security policies, and using declarative resource definitions to ensure compliance from core to edge.

- Scalable automation. Automate common tasks to operate more efficiently and simply at scale — even in environments with thousands of edge locations.

- Unified management. Gain visibility and insight into all applications, clusters, and locations in your environment with unified, intelligent management capabilities.

- Application portability. Build and deploy applications that can run across your environment without change, and move them as needs and conditions change.

Choose deployment topologies that fit your needs

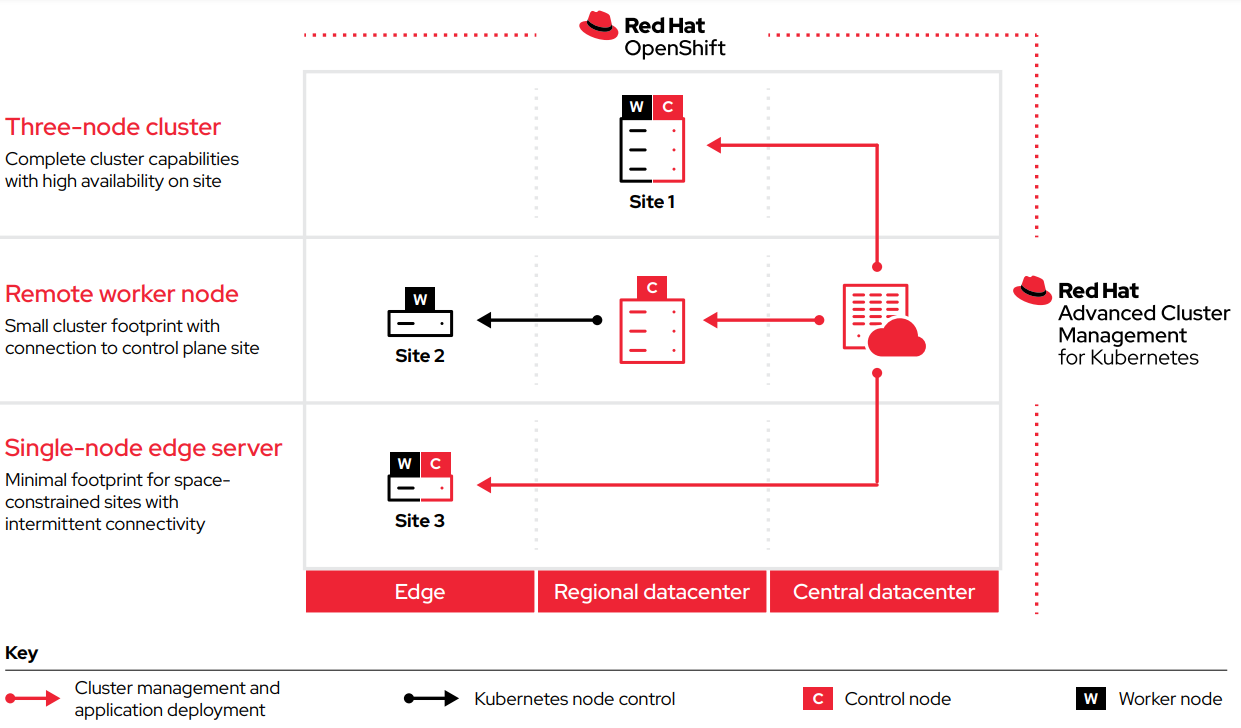

To meet the varying physical space, power, cooling, and connection constraints of edge locations, Red Hat solutions based on Red Hat OpenShift® can be deployed in three edge topologies: three-node clusters, remote worker nodes, and single-node edge servers. These topologies give you all of the capabilities of a full Kubernetes platform in lower-cost, smaller-footprint options that let you deploy and manage applications consistently across edge tiers. Each one connects to your central datacenter control plane for unified management via Red Hat Advanced Cluster Management for Kubernetes. All topologies also include Red Hat Advanced Cluster Security for Kubernetes to protect your applications and infrastructure. Each topology can address a variety of edge use cases, and you can mix and match topologies and move applications and data across edge tiers and locations to meet your needs over time.

Common edge use cases

Organizations can use edge computing for many purposes and applications. Examples include:

- Predicting equipment failures before they occur.

- Performing preventative maintenance.

- Reducing unplanned downtime due to failures.

- Tracking user behaviors and preferences.

- Delivering user offers and discounts in real time.

- Deploying software-defined network (SDN) functions and services.

- Providing edge services to enterprises and other end customers.

Three-node cluster topology

The three-node cluster topology is a reduced-footprint, fully autonomous deployment of Red Hat OpenShift using three servers that each act as both control plane and worker nodes. The three-node cluster is connected to your core datacenter for unified management and control.

Benefits of the three-node cluster topology:

- High availability. Each of the three servers has both control plane and worker node capabilities, allowing work to continue in the event of a failed or offline node.

- Smaller footprint. Three servers require less space, power, and cooling than a larger cluster.

- Local management. Because three-node clusters contain both control plane and worker nodes, you can manage your cluster directly from your edge site.
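The high-availability benefit above can be sanity-checked with simple quorum arithmetic. The sketch below assumes the control plane keeps its state in a majority-quorum store, as etcd does in Kubernetes. The function names are illustrative and are not part of any Red Hat API.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of a cluster with `members` voting control plane nodes."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority is still reachable."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(f"{n} control plane nodes: quorum={quorum_size(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

Under this assumption, a three-node cluster still has a majority with one node offline, while a single control plane node has no such margin.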

Opt for the three-node cluster topology when you need complete cluster capabilities with high availability on-site. Because this topology creates a complete functional cluster, you can also deploy it at locations with intermittent or low-bandwidth connections to your core datacenter. This topology is often used for regional datacenters and locations.

Minimum requirements for three-node clusters:

- Three physical servers each with six CPU cores, 24GB of memory, and 120GB of disk space, running Red Hat Enterprise Linux® CoreOS.

Remote worker node topology

The remote worker node topology consists of physically separated control plane and worker nodes. The control plane nodes are often located at a larger site like a regional or core datacenter, while the worker nodes are distributed across smaller edge sites. Stable connectivity between control plane and worker nodes is needed to allow continuous management of the worker nodes. A three-node cluster can also function as the control plane for remote worker nodes.

Benefits of the remote worker node topology:

- Distributed footprint. Because control plane and worker nodes can be located at different sites, you can deploy a very small footprint — even just a single server — at edge locations. All resources in worker node servers can be used for workloads, increasing efficiency.

- Centralized control. Your control plane nodes can manage thousands of remote worker nodes as a single environment, simplifying administration.

- High availability. While control plane and worker nodes are not co-located, this topology still provides the resiliency of a complete, highly available cluster when multiple worker nodes are deployed together at a site.

Choose the remote worker node topology when you have very limited space at your edge sites, but still have a strong, stable connection to your control plane site.

Minimum requirements for remote worker nodes:

- One or more physical servers each with two CPU cores, 8GB of memory, and 120GB of disk space, running Red Hat Enterprise Linux or Red Hat Enterprise Linux CoreOS.

Single-node edge server topology

The single-node edge server topology creates a fully functional Red Hat OpenShift deployment on a single server that acts as both a control plane and a worker node. In this topology, Red Hat OpenShift addresses the hardware of only one server and runs on bare metal, providing extremely high performance and efficiency. Single-node edge servers can connect to your central management cluster for unified administration. If the connection between your edge site and core fails, single-node edge clusters continue to operate and manage themselves independent of your central management cluster.

You can deploy single-node edge servers manually or use the zero-touch provisioning capabilities of Red Hat Advanced Cluster Management with an assisted installer.

Benefits of the single-node edge server topology:

- Smallest footprint. Your entire deployment fits on a single server, requiring minimal space, power, and cooling.

- Operational independence. Single-node edge servers do not depend on your centralized control plane. The control plane on the edge server allows it to reboot to your desired state without a connection to your core datacenter. If the link to a central site fails, workloads keep running, and if a pod fails, the local control plane restores the desired state even without connectivity to the core site.
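The desired-state behavior described above can be pictured as a local reconcile loop. The following is a toy sketch, not how Red Hat OpenShift is implemented: the class `LocalControlPlane`, its fields, and the application name are hypothetical, and the only point is that the comparison between desired and running state happens entirely on the edge server.

```python
from dataclasses import dataclass, field


@dataclass
class LocalControlPlane:
    """Toy stand-in for a control plane running on the edge server itself."""
    desired_replicas: dict[str, int]                      # app name -> desired pod count
    running: dict[str, int] = field(default_factory=dict)  # app name -> running pod count

    def reconcile(self) -> list[str]:
        """Bring running pods back in line with desired state; return the actions taken.

        Nothing here contacts the core datacenter: the desired state is held locally.
        """
        actions = []
        for app, want in self.desired_replicas.items():
            have = self.running.get(app, 0)
            if have < want:
                actions.append(f"start {want - have} pod(s) for {app}")
            elif have > want:
                actions.append(f"stop {have - want} pod(s) for {app}")
            self.running[app] = want
        return actions


if __name__ == "__main__":
    plane = LocalControlPlane(desired_replicas={"sensor-gateway": 2})
    plane.reconcile()                      # initial start-up
    plane.running["sensor-gateway"] = 1    # simulate a pod failure
    print(plane.reconcile())
```

In this sketch, a second call to `reconcile()` after the simulated failure reports that one pod is started again, regardless of whether a link to a central site exists.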

Select the single-node edge server topology when your edge site is very space-constrained or when there is limited bandwidth or intermittent connectivity between your edge and core datacenter sites.

Minimum requirements for single-node edge servers:

- One physical server with four CPU cores, 16GB of memory, and 120GB of disk space, running Red Hat Enterprise Linux CoreOS.
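The minimum requirements listed for the three topologies can be compared against the hardware available at a site. The sketch below encodes only the per-server figures and server counts stated in this document. The names `ServerSpec`, `MINIMUMS`, `MIN_SERVERS`, and `eligible_topologies` are hypothetical, and the check ignores operating system, connectivity, and workload sizing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    cpu_cores: int
    memory_gb: int
    disk_gb: int


# Per-server minimums as listed in this document.
MINIMUMS = {
    "three-node cluster": ServerSpec(cpu_cores=6, memory_gb=24, disk_gb=120),
    "remote worker node": ServerSpec(cpu_cores=2, memory_gb=8, disk_gb=120),
    "single-node edge server": ServerSpec(cpu_cores=4, memory_gb=16, disk_gb=120),
}

# Minimum number of servers each topology needs at the edge site.
MIN_SERVERS = {
    "three-node cluster": 3,
    "remote worker node": 1,
    "single-node edge server": 1,
}


def meets_minimum(server: ServerSpec, minimum: ServerSpec) -> bool:
    """True if the server meets or exceeds every listed minimum."""
    return (server.cpu_cores >= minimum.cpu_cores
            and server.memory_gb >= minimum.memory_gb
            and server.disk_gb >= minimum.disk_gb)


def eligible_topologies(servers: list[ServerSpec]) -> list[str]:
    """Topologies for which enough of the given servers meet the per-server minimum."""
    result = []
    for name, minimum in MINIMUMS.items():
        qualifying = sum(meets_minimum(s, minimum) for s in servers)
        if qualifying >= MIN_SERVERS[name]:
            result.append(name)
    return result


if __name__ == "__main__":
    site = [ServerSpec(cpu_cores=4, memory_gb=16, disk_gb=240)]
    print(eligible_topologies(site))
```

For the example site with one four-core, 16GB server, the sketch reports the remote worker node and single-node edge server topologies as candidates. Treat the result as a first filter on hardware only, not as a sizing recommendation.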

Speed edge deployment with validated patterns

To help you deploy applications at the edge faster and more easily, Red Hat offers validated deployment patterns. These patterns are detailed deployments created for different edge use cases. Predefined configurations bring together the Red Hat portfolio and technology ecosystem to help you rapidly implement your edge architecture. Validated patterns provide a foundational architecture that you can customize to meet your organization’s needs.

Red Hat validated patterns contain all of the code needed to build a proof-of-concept edge stack. All steps are fully automated via GitOps processes so you can deploy consistently and at scale. Finally, these patterns are continuously updated and tested against current product releases to reduce risk and deliver the latest capabilities.

Learn more about validated patterns for edge at redhat.com/en/products/edge/validated-patterns.

Build a consistent, flexible foundation for your edge environments

Each edge environment is different. Red Hat provides a portfolio of integrated platforms and tools to help you build the right foundation for your edge use case.

- Red Hat OpenShift is an enterprise-ready Kubernetes container platform that provides a consistent experience across your hybrid cloud, multicloud, and edge deployments. It allows developers to build, run, and manage applications using their preferred tools, processes, and locations — at the edge, on-site, or within your hybrid cloud.

- Red Hat Advanced Cluster Management for Kubernetes is a unified cluster and application management interface with built-in security policies. It provides a single, consistent view of resources from your core to your edge, so you can manage thousands of sites and clusters more simply.

- Red Hat Advanced Cluster Security for Kubernetes is an enterprise-ready, Kubernetes-native container security solution that helps you more securely build, deploy, and run cloud-native applications. It delivers complete life cycle security capabilities for Red Hat OpenShift.

- Red Hat OpenShift Data Foundation provides data and storage services for Red Hat OpenShift with simplified access, dynamic scalability, and a consistent experience. It can also be deployed on the same servers as your cluster for a hyperconverged configuration in the three-node cluster topology.

- Red Hat Application Services is a set of products for creating a unified environment for application development, delivery, integration, and automation. It includes frameworks, integration solutions, automation, runtimes, and programming languages for building fast, edge-optimized applications.

- Red Hat Ansible® Automation Platform is an enterprise framework for building and operating IT automation at scale, from hybrid cloud to the edge. It allows users across your organization to create, share, and manage automation — from development and operations to security and network teams.

Learn more

Red Hat can help you extend your business to the edge with platforms and tools that deliver consistency, manageability, automation, and simplicity across your entire environment. We also continue to work to meet the evolving needs of edge deployments.

Learn how you can gain more choice and flexibility at the edge: redhat.com/en/technologies/cloud-computing/openshift/edge-computing
